Biological computing from Heinz von Foerster to the present: a tool for understanding intelligence?

Since the summer of 2005, the Brain Mind Institute, together with IBM, the world's largest computer company, has been pursuing the unique and highly ambitious Blue Brain Project. The project uses reverse engineering (RE), a process of discovering the fundamental principles of a device or system by taking it apart in order to understand and reconstruct it. According to director Henry Markram, recent leaps in computer technology and the huge amount of neurophysiological data accumulated over the last decades should help us realize the dream of building biologically accurate models of the brain from first principles to aid our understanding of brain function and dysfunction. As Markram reports, his team has already succeeded in building a "highly biologically accurate" model of a neocortical column, which is considered to be the basic microstructure of the brain. From now on, he asserts, it will only be a question of time and computational power to eventually create a computer simulation of the whole human brain with its 100 billion neurons.

"As from now, it will only be a question of time and computational power to eventually create a computer simulation of the whole human brain with its 100 billion neurons" (Henry Markram)

It is indeed the immense calculating speed of IBM's latest supercomputer, Blue Gene, that has allowed Markram and his team to take their first steps. Pointing to the fact that modern computing has already revolutionized other disciplines of science by simulating "some of nature's most intimate processes with exquisite accuracy", Markram is certain that by exploiting the enormous computing power of Blue Gene, it will soon be possible to make a similar series of quantum leaps in neuroscience and simulate the brains of mammals with unprecedented biological accuracy.

Interestingly, IBM's supercomputer itself replicates the structure of biological systems: With its system-on-a-chip design, Blue Gene consists of up to 65,536 nodes that each incorporate all the elementary components of a comparatively low-power computer in one integrated circuit. This distinguishes Blue Gene from its predecessors, which mostly relied on the conventional method of sequential computation, where one or several high-power central processing units compute a series of instructions one at a time. By contrast, computation in Blue Gene is realized through the complex and collective interaction of a huge number of 'nodes' and not as a result of a linear series of numerical operations.

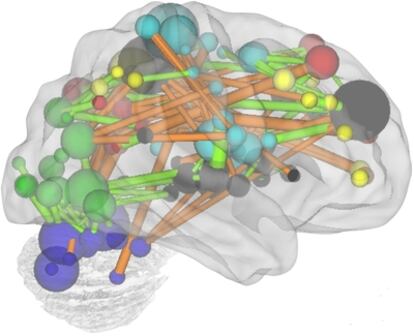

As Markram points out, since Blue Gene's processors "act like neurons, and connections between processors act as axons", a fundamentally different form of intelligence can be expected to emerge from Blue Gene, one that actually resembles biological intelligence rather than being a product of brute calculation speed. Within the Blue Brain Project, Blue Gene's biologically inspired computer architecture is used to give back a 'body' to the simulated brain: One virtual neuron is "mapped" onto each of Blue Gene's processors so that effectively "the entire Blue Gene is essentially converted into a neocortical microcircuit". In other words, a biologically inspired computer architecture is used to implement a software simulation of the human brain. Markram closes the circle when he announces that, apart from "completing the puzzle of the human brain", Blue Brain will in turn serve as a promising "circuit design platform" for revealing powerful circuit designs for future computer technologies.

This example from computational neuroscience and cutting-edge supercomputing suggests that life science and computer science have finally converged: Apparently, biological knowledge can be used to design more efficient computer architectures, which in turn can be used to generate more knowledge about complex biological phenomena by means of modeling and simulation.

To the philosopher, however, this relationship turns out to be highly problematic: What justifies the assumption that technology can be built from the 'blueprints' of nature, and at what point do both hardware and software reach the limits of their ability to replicate biological design? And even more importantly: How does the process of modeling biological phenomena such as 'intelligence' by means of computational tools shape our understanding of nature and life? To answer epistemological questions like these, it usually helps to look for early historical manifestations of a particular style of thinking, the different contexts from which it emerged, and its conceptual capacities and constraints.

The aim of my research is thus to unearth the discursive formations (technological, biological and institutional) that led to the belief in a general compatibility of computing technology and biology. Browsing through unedited archival material in Vienna and Urbana-Champaign about Heinz von Foerster's Biological Computer Laboratory (BCL) and conducting interviews with its former members, I discovered that the idea of creating more biologically accurate computer architectures as an alternative to the conventional methods of serial computation has been around since the early days of computing history.

The Austrian-born physicist Heinz von Foerster established the Biological Computer Laboratory at the University of Illinois in Urbana-Champaign in 1958. It was intended to be an interdisciplinary research facility where research on cybernetics would lead to more efficient computer architectures and a better understanding of complex biological phenomena. Von Foerster had become acquainted with cybernetics shortly after immigrating to the United States in 1948. He joined an interdisciplinary group of scientists, who had been meeting regularly in New York City since 1946 to discuss problems of "circular causal and feedback mechanisms in biological and social systems". The participants of these famous Macy Conferences (1946-1953) - like the mathematician Norbert Wiener or the neurophysiologist Warren McCulloch - established their new approach of cybernetics in order to integrate different fields of scientific research through the analysis, application and design of universal principles of regulation and communication. One of the most controversial and important papers dealt with the physiological functioning and logical calculus of the human brain and asked whether a model of its inner communication processes could possibly serve as a blueprint for the design of computers: If a neuron could be described as performing elementary logical operations, then a nervous system could be regarded as a computer and a computer could be designed based on neural network logics.
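The claim that a neuron can be described as performing elementary logical operations can be made concrete with a small sketch. The Python code below is an illustration only, not a reconstruction of any circuit discussed at the Macy Conferences: it assumes a simplified threshold unit with binary inputs, in which a negative weight stands in for inhibition, and all function names and weight values are chosen here for the example.

```python
def threshold_neuron(inputs, weights, threshold):
    """Fire (return 1) if the weighted sum of binary inputs reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


# Elementary logical operations, each realized by a single threshold unit.
# The weights and thresholds are illustrative choices, not historical values.
def and_gate(a, b):
    return threshold_neuron([a, b], [1, 1], threshold=2)


def or_gate(a, b):
    return threshold_neuron([a, b], [1, 1], threshold=1)


def not_gate(a):
    # A negative weight plays the role of an inhibitory connection.
    return threshold_neuron([a], [-1], threshold=0)


def xor_net(a, b):
    """A small network of units: (a OR b) AND NOT (a AND b)."""
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, and_gate(a, b), or_gate(a, b), xor_net(a, b))
```

No single unit of this kind can compute exclusive-or, but a network of three layers of units can, which is the sense in which a nervous system composed of such elements could be regarded as a computer.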

Putting the cybernetic research agenda into practice, the experiments conducted at the Biological Computer Laboratory (BCL) involved the construction of analog machine models that were expected to eventually function according to the same 'mechanisms' that could be found and analyzed in the parallel neural structures of the brains and sensory systems of living organisms. In this sense the term 'biological computer' referred both to information processing 'devices' that could be observed in living creatures, such as the eye, the ear or the brain, and to the potentials of alternative computer architectures. What Heinz von Foerster was hoping for was to achieve operational definitions of the biological principles that were assumed to structure complex phenomena such as intelligence or perception; these, he said, included "self-organization" and "pattern recognition". To achieve this ambitious aim, von Foerster gathered a very heterogeneous international pool of renowned cyberneticians, biologists, neurophysiologists, mathematicians and philosophers, assisted in their work by young engineers from the University of Illinois' Department of Electrical Engineering.

Instead of using the now classic architecture introduced by John von Neumann in 1945 for a stored-program computer with processor, memory and input and output devices, von Foerster described his projected machine as a decentralized network of interconnected elementary components that would display patterns of intelligent behavior through parallel, rather than sequential, computation. Likening it to an earthworm that "with only 300 neurons can do remarkable things", he envisioned the biological computer responding to external stimuli and adapting to its environment with a minimum of elementary units and processing speed. A basic prototype, which he demonstrated to a journalist by the end of 1958, consisted of complex pulse-modulated electrical circuitry composed of 168 "artificial neurons" and was able to "adapt" to its electrical surroundings: These elementary components allowed the network to "evolve" preferred paths in response to an electric input signal, so that the state of the automaton and the output signal depended upon the history of stimuli fed into the machine.
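What it means for an output to depend on the history of stimuli can be shown with a deliberately simple toy model. The sketch below is not a description of von Foerster's pulse-modulated circuitry, whose details are not given here; the class name, the reinforcement rule and the parameters `gain` and `decay` are all assumptions made for the sake of illustration. Each path through the network has a conductance that grows when the path carries a signal and relaxes toward a baseline when it does not.

```python
class AdaptivePaths:
    """Toy network whose paths are reinforced by use (hypothetical model)."""

    def __init__(self, n_paths, gain=0.5, decay=0.9):
        self.conductance = [1.0] * n_paths  # baseline conductance of every path
        self.gain = gain    # how strongly a used path is reinforced (assumed value)
        self.decay = decay  # fraction of past reinforcement kept per step (assumed value)

    def step(self, stimulus):
        """Feed one stimulus (one input strength per path) and return the output."""
        # The output is the input signal weighted by the current conductances.
        output = sum(c * s for c, s in zip(self.conductance, stimulus))
        # Adaptation: relax toward the baseline of 1.0, then reinforce used paths.
        self.conductance = [
            1.0 + self.decay * (c - 1.0) + self.gain * s
            for c, s in zip(self.conductance, stimulus)
        ]
        return output


def response_after(history, probe, n_paths=3):
    """Return the output for `probe` after the network has seen `history`."""
    net = AdaptivePaths(n_paths)
    for stimulus in history:
        net.step(stimulus)
    return net.step(probe)


if __name__ == "__main__":
    probe = [1, 0, 0]
    trained_on_same_path = response_after([[1, 0, 0]] * 5, probe)
    trained_on_other_path = response_after([[0, 0, 1]] * 5, probe)
    print(trained_on_same_path, trained_on_other_path)
```

Two networks that start out identical answer the same probe signal differently once they have been exposed to different stimuli: the one whose first path was used repeatedly has "evolved" a preference for it.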

Heinz von Foerster's student Murray Babcock later expanded the prototype into a machine called an Adaptive Reorganizing Automaton. Babcock described it as an artificial neural network that could either be operated as an analog computer or studied as a hardware simulation of complex neural behavior, "similar to the interaction between a biologist and a living system", that allowed a better understanding of the functional connections between neurons. Until funding for cybernetic projects ceased in the early 1970s and the BCL was closed in 1974, several other prototypes were constructed, among them an artificial retina model called NumaRete and an artificial ear called Dynamic Signal Analyzer.

The artifacts of the Biological Computer Laboratory constitute an excellent setting for a historical and epistemological analysis of the biological computer movement of the 1960s. Several lines of early research have recently reappeared because of renewed interest in parallel computation, neural networks or bio-informatics, as expressed in such ambitious and costly endeavors as IBM's Blue Brain Project. Back in the 1960s, however, the transfer from biological knowledge to electrical engineering and vice versa did not go as smoothly as expected. On the contrary, BCL engineers like Murray Babcock or Paul Weston were well aware that assembling their bio-cybernetic machines involved a great deal of tinkering, a little bit of patching up and sometimes even a hint of trickery. When I interviewed Weston, he said that technology could never replicate the mechanisms of nature with biological accuracy, even if their machines were able to duplicate certain aspects of biological performance. Nevertheless, as the contemporary example of the Blue Brain Project shows, finding bio-technological solutions for hardware and software that somehow make use of the complex but highly efficient and economical principles of nature has always remained an intriguing idea that, as we have seen, still occupies the minds of leading scientists and computer engineers. That is why I am trying to outline the technological, intellectual and institutional contexts in which the Biological Computer Laboratory and Heinz von Foerster existed. My aim is to better understand the conditions in which this paradigm of a potential convergence of biology and (computing-)technology emerged and to track the particular formations of knowledge it produced.

Jan Klaus Mueggenburg, University of Vienna www.atomiumculture.eu
